Innovation and Technology

Microsoft Invests $300 Million in Datacenters

Microsoft Invests $290 Million in South Africa’s AI and Cloud Infrastructure

Microsoft Announces Significant Investment in South Africa

Microsoft has announced a significant investment of $290 million over the next two years in South Africa’s AI and cloud infrastructure. The investment was announced by Microsoft’s vice chair and president, Brad Smith, at an event with South African President Cyril Ramaphosa in Johannesburg.

Investment to Help South Africa Become a Globally Competitive AI Economy

Smith emphasized that the investment will support South Africa’s ambition to become a globally competitive AI economy. The country has already seen significant growth in its technology sector, including Microsoft’s $1.1 billion investment in large data centers in Johannesburg and Cape Town.

Certification Process for Young South Africans

Microsoft is also paying for certification for 50,000 young South Africans, enabling them to earn qualifications in digital skills. This is part of the company’s plan to educate young Africans and certify their skills so they can find jobs.

High-Demand Skills in AI, Data Science, Cybersecurity, and Cloud Solution Architecture

The high-demand skills include AI, data science, cybersecurity analysis, and cloud solution architecture. According to Smith, these are precisely the certificates and skills that win people jobs.

Paying for Education

Microsoft is covering both the training and the certification exams. "We’re in effect paying for people so they can get the training and take the certification exams," Smith said. "With a Microsoft certificate for something like cloud architecture or cyber security or AI, you’re going to be able to get a job."

Private-Public Partnership with YES

The program is a private-public partnership with South Africa’s Youth Employment Service (YES), which has 1,800 corporate partners and has trained 177,000 people so far. The organization takes talented youth from disadvantaged backgrounds and places them in their first job.

Conclusion

Microsoft’s significant investment in South Africa’s AI and cloud infrastructure reflects the company’s commitment to empowering young people and promoting economic growth in the region. The investment is expected to help create jobs and support South Africa’s goal of becoming a globally competitive AI economy.

FAQs

Q: What is the size of Microsoft’s investment in South Africa’s AI and cloud infrastructure?

A: The investment is $290 million over the next two years.

Q: What is the purpose of the investment?

A: The investment will support South Africa’s ambition to become a globally competitive AI economy.

Q: What are the high-demand skills included in the certification process?

A: The high-demand skills include AI, data science, cybersecurity analysis, and cloud solution architecture.

Q: Why is Microsoft paying for the certification process?

A: Microsoft is paying for people to get the training and take the certification exams, helping them to get a job.

AMD Unveils MI350 GPU And Roadmap

Introduction to AMD’s Advancing AI Event

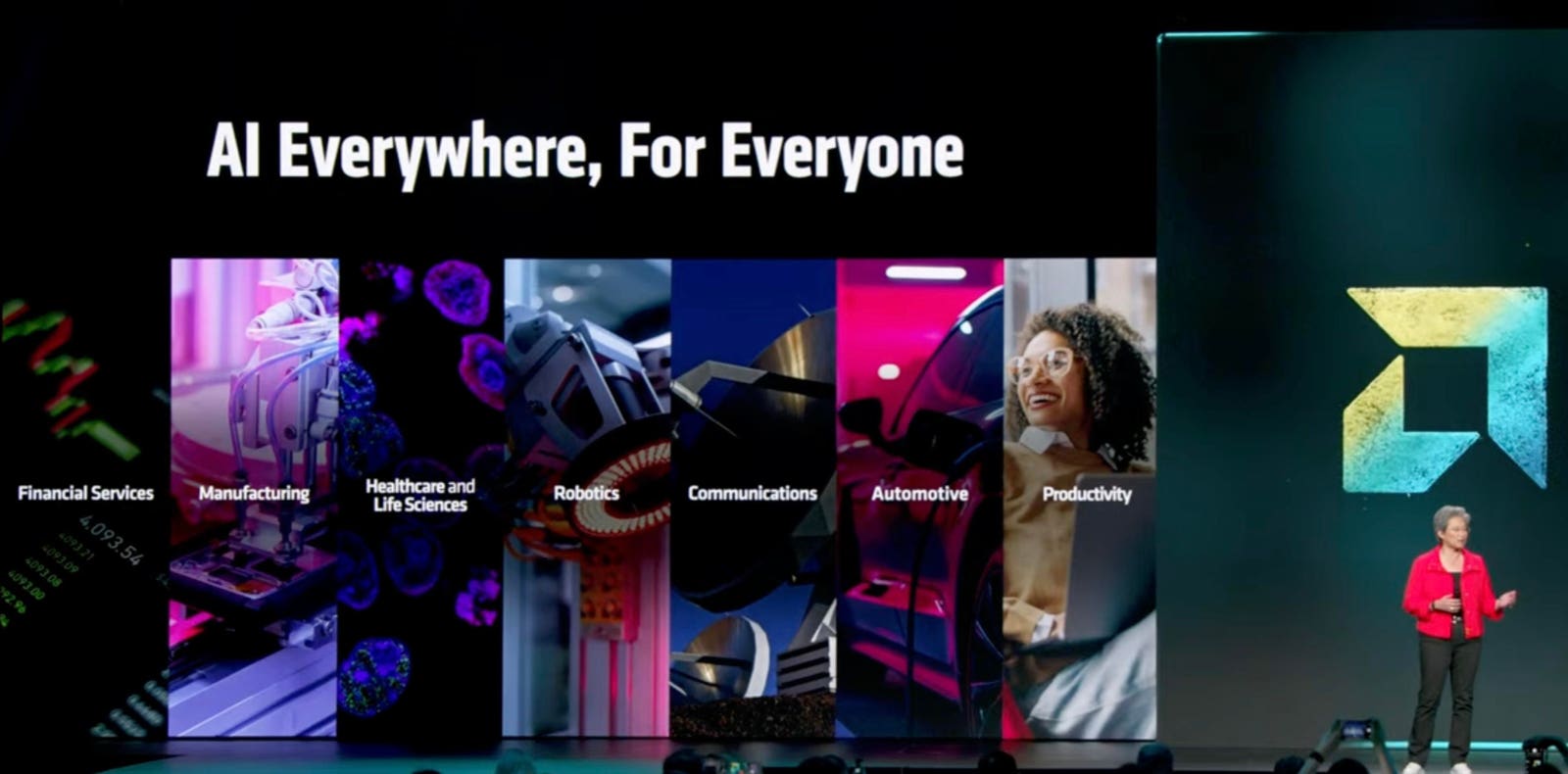

AMD held its now-annual Advancing AI event today in Silicon Valley, unveiling new GPUs, new networking, new software, and even a rack-scale architecture for 2026/27 to better compete with the Nvidia NVL72 that is taking the AI world by storm. Dr. Lisa Su, Chairman and CEO of AMD, kicked off the event.

Net-Net Conclusions: AMD Is Catching Up

While AMD has yet to meet investor expectations, and its products remain a distant second to Nvidia’s, AMD continues to honor its commitment to an annual accelerator roadmap, delivering nearly four times better performance gen-on-gen with the MI350. That pace could help it catch up to Nvidia on GPU performance, and it keeps AMD ahead of Nvidia on memory capacity and bandwidth, although Nvidia’s lead in networking, system design, AI software, and ecosystem remains intact.

However, AMD has stepped up its networking game with support for Ultra Ethernet this year and UALink next year, for scale-out and scale-up respectively. And, for the first time, AMD showed a 2026/27 roadmap with the “Helios” rack-scale AI system, which narrows the gap somewhat with Nvidia’s NVL72 and upcoming Kyber rack-scale system. At least AMD is now on the playing field.

Oracle said it is standing up a 27,000-GPU cluster using AMD Instinct GPUs on Oracle Cloud Infrastructure, so AMD is clearly gaining traction. AMD also unveiled ROCm 7.0 and the AMD Developer Cloud Access Program to help it build a larger and stronger AI ecosystem.

The AMD MI350 Series GPUs

The AMD Instinct GPU portfolio has struggled to catch up with Nvidia, but customers value AMD’s price/performance and openness. AMD claims to offer 40% more tokens per dollar, and says that 7 of the 10 largest AI companies have adopted its GPUs, among more than 60 named customers.

AMD’s biggest claim to fame is the larger memory footprint it supports, now 288 GB of HBM3E per GPU with the MI350. That is enough to hold today’s larger models, up to 520B parameters, on a single GPU, and it is 60% more memory than the competition, which translates to lower TCO for many models. The MI350 also has twice Nvidia’s 64-bit floating-point performance, which is important for HPC workloads.
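
The 520B-parameter figure is easier to interpret with a little arithmetic: weight memory is roughly the parameter count times the bytes stored per parameter. The sketch below assumes the figure counts model weights only (no KV cache, activations, or runtime overhead) and tries a few common precisions; AMD’s exact assumptions behind the 520B claim are not stated in the announcement as reported here.

```python
# Back-of-envelope weight-memory check. Assumptions (not from AMD): weights only,
# no KV cache, activations, or runtime overhead; 1 GB = 1e9 bytes.
BYTES_PER_PARAM = {
    "FP16/BF16": 2.0,
    "FP8": 1.0,
    "FP4": 0.5,
}

HBM_PER_GPU_GB = 288.0  # MI350 capacity quoted in the article


def weight_footprint_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed to hold the model weights alone, in GB."""
    return num_params * bytes_per_param / 1e9


def fits(num_params: float, fmt: str, capacity_gb: float = HBM_PER_GPU_GB) -> bool:
    """True if the weights, stored in format `fmt`, fit in `capacity_gb`."""
    return weight_footprint_gb(num_params, BYTES_PER_PARAM[fmt]) <= capacity_gb


if __name__ == "__main__":
    params = 520e9  # the 520B-parameter figure quoted above
    for fmt, bpp in BYTES_PER_PARAM.items():
        need = weight_footprint_gb(params, bpp)
        print(f"{fmt:>9}: {need:7.1f} GB, fits in {HBM_PER_GPU_GB:.0f} GB: {fits(params, fmt)}")
```

Under these assumptions only the 4-bit case fits (260 GB of weights), leaving roughly 28 GB for everything else, which suggests the claim relies on low-precision weights.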

The MI355 uses the same silicon as the MI350 but is binned to run faster and hotter, and is AMD’s flagship data center GPU. Both GPUs are available on industry-standard UBB8 boards in both air- and liquid-cooled versions.

AMD claims, and has finally demonstrated through MLPerf benchmarks, that the MI355 is roughly three times faster than the MI300 and even on par with Nvidia’s B200. But keep in mind that Nvidia’s NVLink, InfiniBand, system design, ecosystem, and software keep it in a leadership position for AI, and the B300 will begin shipping soon.

AMD’s GPU Roadmap Becomes More Clear

AMD also added some detail on next year’s MI400 series. OpenAI CEO Sam Altman appeared on stage and gave the MI450 some serious love; OpenAI has been instrumental in laying out market requirements for AMD’s engineering teams.

The MI400 will use 432GB of HBM4 per GPU and will support 300GB/s Ultra Ethernet networking through Pensando NICs.

To put MI400 performance into perspective, AMD showed a hockey-stick curve of the performance gains it expects, reminiscent of a similar slide Jensen Huang used at GTC. Clearly, AMD is on the right path.

Networking: AMD’s Missing Link

While much of the attention at the Advancing AI event went to the MI350/355 GPUs and the roadmap, the networking section was more exciting and more important.

More important still for large-scale AI, AMD is an original member of the UALink consortium and will support UALink with the MI400 series. While AMD’s slide made this look impressive, keep in mind that Nvidia will likely ship NVLink 6.0 in the same timeframe, or earlier.

AMD ROCm Might Actually Start to Rock!

Finally, let’s give ROCm some credit. The development team has been hard at work since SemiAnalysis sharply criticized AMD’s AI software stack late last year, and it has good performance results and growing ecosystem adoption to show for it.

To demonstrate the performance point, AMD showed over three times the inference performance with ROCm 7. This is partly due to the steadily improving open AI software stack, such as Triton from OpenAI, a trend that will keep Nvidia on its toes.

Conclusion

AMD’s Advancing AI event showed that the company is committed to catching up with Nvidia in AI. With its new GPUs, improved networking, and enhanced software, AMD is making significant strides. While Nvidia still holds the leadership position, AMD’s efforts are helping to close the gap.

FAQs

Q: What was the main focus of AMD’s Advancing AI event?

A: The main focus of AMD’s Advancing AI event was to showcase the company’s new GPUs, improved networking, and enhanced software, as well as its commitment to catching up with Nvidia in the AI space.

Q: What is the MI350 and how does it compare to Nvidia’s GPUs?

A: The MI350 is AMD’s new GPU, offering 288 GB of HBM3E memory and twice the 64-bit floating-point performance of Nvidia’s GPUs. While it still lags behind Nvidia in some areas, it is a competitive alternative thanks to its larger memory footprint and lower TCO.

Q: What is AMD’s GPU roadmap for the future?

A: AMD’s GPU roadmap includes the MI400 series, which will use 432GB of HBM4 per GPU and support 300GB/s Ultra Ethernet networking through Pensando NICs. The company is also working on a rack-scale AI system called "Helios" for 2026/27.

Q: How does AMD’s ROCm software stack compare to Nvidia’s?

A: AMD’s ROCm software stack has improved significantly over the last two years and has seen broad ecosystem collaboration. While Nvidia’s software stack is still more comprehensive, AMD’s ROCm is becoming a more viable alternative with its improved performance and openness.

Digital Storage and AI

Introduction to Data Centers

In this article we will look at some recent announcements on digital storage and its use in AI training and inference. But first, an example of digital storage technology used to save humanity.

Data Storage Saves the Day

Digital archiving startup SPhotonix’s 5D memory crystal was an important element in the plot of the latest Mission: Impossible movie, in which a 360TB memory crystal is used to stop a rogue AI from destroying the world. In practice, SPhotonix stores data on a 5-inch glass substrate using its FemtoEtch nano-etching technology. Note that I am an advisor for SPhotonix.

SPhotonix 5D Memory Crystal

Digital storage technologies have appeared in many movies and TV shows over the years, such as the StorageTek tape library in the 1994 film “Clear and Present Danger.”

Hybrid AI Data Centers

In practice, data centers generally use SSDs as primary storage, including for AI training. SSDs provide fast storage for refreshing data in the high-bandwidth memory located close to the GPUs that do the processing. However, storing data on SSDs in data centers costs about 6X more than storing it on HDDs.

This leads data centers to use HDDs for colder but still useful data in a hierarchical storage environment, moving data back and forth between storage technologies to balance cost against performance. Archived information that is rarely used is ultimately kept on magnetic tape cartridges or optical storage.
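
The cost trade-off behind tiering can be sketched in a few lines. This is a hypothetical, simplified policy, not how any particular vendor moves data: data sets are placed by how recently they were accessed, and the blended cost is compared with keeping everything on flash. Only the roughly 6X SSD-to-HDD cost ratio comes from the text; the tape cost, the 7- and 90-day thresholds, and the example data sets are illustrative assumptions.

```python
from dataclasses import dataclass

# Relative cost per TB. The 6:1 SSD:HDD ratio is from the text above;
# the tape value is a placeholder assumption for illustration only.
COST_PER_TB = {"ssd": 6.0, "hdd": 1.0, "tape": 0.25}


@dataclass
class Dataset:
    name: str
    size_tb: float
    days_since_access: int


def choose_tier(ds: Dataset, hot_days: int = 7, warm_days: int = 90) -> str:
    """Hypothetical age-based policy: hot -> SSD, warm -> HDD, cold -> tape."""
    if ds.days_since_access <= hot_days:
        return "ssd"
    if ds.days_since_access <= warm_days:
        return "hdd"
    return "tape"


def relative_cost(datasets, placement) -> float:
    """Sum of size x per-TB cost, using `placement` to pick each data set's tier."""
    return sum(COST_PER_TB[placement(ds)] * ds.size_tb for ds in datasets)


datasets = [
    Dataset("active training shards", 100, 1),
    Dataset("recent checkpoints", 200, 30),
    Dataset("old experiment logs", 700, 400),
]

all_flash = relative_cost(datasets, lambda ds: "ssd")
tiered = relative_cost(datasets, choose_tier)

if __name__ == "__main__":
    for ds in datasets:
        print(f"{ds.name:<24} -> {choose_tier(ds)}")
    print(f"all-flash cost: {all_flash:.0f}, tiered cost: {tiered:.0f} "
          f"({tiered / all_flash:.0%} of all-flash)")
```

A real hierarchy would also size the flash tier against the throughput the GPUs need, not just against cost, which is why hot data stays on SSD even though it is the most expensive tier.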

Recent Developments in Hybrid Storage

Vdura, formerly the veteran storage company Panasas, recently published a white paper on digital storage for AI workloads and announced changes to its hybrid SSD and HDD storage offering to support HPC and AI workloads. The company now offers QLC NAND flash SSDs combined with high-capacity HDDs under its global namespace parallel file system and object storage, with multi-level erasure coding and fast key-value storage. The image below shows the layout of this hybrid SSD and HDD storage system.

Vdura Global Namespace Storage
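
Erasure coding protects data by storing computed redundancy instead of full copies. Vdura’s multi-level scheme is not described in detail here, so the sketch below shows only the simplest case: a single XOR parity block (the idea behind RAID 5) that lets any one lost data block be rebuilt. Production systems generally use stronger codes, such as Reed-Solomon, that survive multiple failures, and a multi-level design layers such protection, for example across nodes and within each node.

```python
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length blocks."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data_blocks: list[bytes]) -> bytes:
    """Compute one parity block as the XOR of all equal-length data blocks."""
    return reduce(xor_bytes, data_blocks)


def recover(surviving: list[bytes], parity: bytes) -> bytes:
    """Rebuild the single missing data block: parity XOR every surviving block."""
    return reduce(xor_bytes, surviving, parity)


blocks = [b"GPU!", b"DATA", b"FAST"]  # illustrative 4-byte "stripes"
parity = encode(blocks)

# Simulate losing blocks[1] (e.g., a failed drive) and rebuilding it.
rebuilt = recover([blocks[0], blocks[2]], parity)
print(rebuilt)  # b'DATA'
```

The storage overhead here is one extra block per stripe rather than a full copy of every block, which is the main reason erasure coding is preferred over replication at large capacities.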

The Vdura Data Platform V11.2 includes a preview of V-ScaleFlow, which enables data movement across QLC flash and high-capacity hard drives. This improves resource utilization, maximizes system throughput, and supports AI-scale workloads efficiently. In particular, the company pairs Phison Pascari 128TB QLC NVMe SSDs with 30+TB HDDs to reduce flash capacity requirements by over 50% and lower power consumption. Total cost of ownership is said to be reduced by up to 60%.

AI Data Pipeline and Storage Requirements

The Vdura white paper goes into detail on data storage and memory utilization in an AI application. The figure below shows an AI data pipeline in which the storage system should keep GPU downtime to a minimum.

AI Data Pipeline

The table below details read, write, performance, and data size requirements for various elements of an AI workload. These elements can require anywhere from GBs to PBs of digital storage, with differing performance requirements, which favors combining storage technologies to support the different elements of the workload.

Element Characteristics in an AI Workflow

The image below shows a sample storage node that can provide all-flash or hybrid SSD and HDD storage to support AI and HPC workloads with a global namespace and a common control and data plane.

Vdura Storage Node

Conclusion

Digital storage technology saved the world from a rogue AI in the latest Mission: Impossible movie. In real data centers, combining SSDs and HDDs can support modern AI workloads while balancing cost and performance.

FAQs

Q: What is the main challenge in using digital storage for AI workloads?

A: The main challenge is balancing cost and performance, as storing data on SSDs can be expensive, while using HDDs may not provide the necessary performance.

Q: What is the role of hybrid storage in AI data centers?

A: Hybrid storage combines the benefits of SSDs and HDDs to provide a balance between cost and performance, enabling efficient AI-scale workloads.

Q: What is the significance of Vdura’s recent announcement?

A: Vdura’s announcement introduces a new hybrid SSD and HDD storage offering that supports HPC and AI workloads, providing a global namespace parallel file system and object storage with multi-level erasure coding and fast key value storage.

The Missing Piece in Competitive Strategy

Introduction to Location Intelligence

A major brewery had a new idea for how to find new customers, create new buzz, and build new loyalty. They had big-time marketing, distribution into stores large and small, and strong relationships with customers, eateries, and bars. What they wanted to do was create their own branded pubs, but they needed decisive insight and intelligence about their own business. So, they mapped the popularity of craft beers by neighborhood, studied nighttime traffic patterns, and added information on the density and appeal of other restaurants and bars.

The Power of "Where"

They looked at dining-out behavior and spending, where it was rising or declining by category, and which areas were expanding, teasing out places where incomes might rise and demographics were shifting to match their target groups. They built their own business intelligence portfolio around the brewery idea on a single quality: location, meaning business intelligence that melds internal customer and operational data with external data of every sort. The questions they were trying to answer were "Where would people be most interested in these new brew pubs?" and "Where do the demographics, values, behaviors, and preferences match the new community the brewer is hoping to tap?"
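
The brewery’s question boils down to ranking candidate areas on several location-based criteria. Below is a minimal weighted-scoring sketch; the neighborhoods, criteria values, and weights are all hypothetical, and a real analysis would draw them from the internal and external data sources described above, usually inside a GIS or BI platform.

```python
# Hypothetical candidate areas and criteria values, for illustration only.
neighborhoods = {
    "Neighborhood A": {"craft_beer_popularity": 0.8, "night_traffic": 1200, "competitor_density": 5},
    "Neighborhood B": {"craft_beer_popularity": 0.6, "night_traffic": 2000, "competitor_density": 12},
    "Neighborhood C": {"craft_beer_popularity": 0.3, "night_traffic": 600, "competitor_density": 2},
}

# Hypothetical weights: positive = more is better, negative = more is worse.
WEIGHTS = {"craft_beer_popularity": 0.5, "night_traffic": 0.3, "competitor_density": -0.2}


def normalize(values):
    """Min-max scale values to [0, 1] so criteria with different units are comparable."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]


def score(areas, weights):
    """Return (area, weighted score) pairs sorted from best to worst."""
    names = list(areas)
    scores = dict.fromkeys(names, 0.0)
    for criterion, w in weights.items():
        column = normalize([areas[n][criterion] for n in names])
        for n, v in zip(names, column):
            scores[n] += w * v
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    for name, s in score(neighborhoods, WEIGHTS):
        print(f"{name}: {s:.2f}")
```

Min-max normalization is a simple choice that keeps foot-traffic counts from swamping 0-to-1 popularity scores; the weights encode the business judgment and are worth testing for sensitivity.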

The Importance of Location in Business

“Where?” is really the question of the moment, whether you’re in construction or energy, consumer goods or retail, restaurants or banking. “Where” questions are incredibly potent—they unlock growth, efficiencies, and innovation. Oddly, though, location is the one thing most often missing from strategic planning, analysis, business intelligence, and operations. Leaving out location means missing chances for efficiency in operations and supply chain, reducing risk, improving marketing effectiveness, and increasing adaptability in an uncertain business environment.

The Cost of Missing Location Intelligence

Leaving out location means missing the chance to grow existing customers in unexpected ways and to find new customers and markets. You’re missing opportunity. Location isn’t about where you are, it’s about where you’re going. There are tools to bring location intelligence right into existing business intelligence platforms, exponentially enriching their analytical power. We’re talking about being able to make the invisible visible—patterns, perils, possibilities—and to map the future.

Mapping and Spatial Analytics

This approach has a name: mapping and spatial analytics. It means applying “where” to basically every question and analysis your company undertakes, weaving spatial intelligence into all the other kinds of intelligence analysis you already do. Once you start using it, “where” becomes not just intuitive but instantly compelling. You start asking, in every setting, how does location fit into this? What about “the where”? Two things are key: spatial analytics tools are easy to use, because the complexity is under the hood, and, as you’ll quickly see, they provide a striking competitive advantage.

Real-World Applications of Mapping and Spatial Analytics

Here are a few examples of how different industries are using mapping and spatial analytics:

- Retail: The third largest fast-food chain in the U.S. by sales uses location data for everything from site selection and drive-through optimization to supply chain risk management and competitive intelligence.

- Consumer goods: One of the world’s largest apparel companies uses mapping and analytics to trace its supply chain across 40 countries and compress six-month reporting tasks into days or weeks.

- Logistics and transportation: One of America’s largest transportation services companies uses mapping and spatial analytics to assess where its customers are, where its trucks are, and where its service depots are.

- Banking: A major bank serving the Southeast and Midwest U.S. uses mapping and analytics to figure out where it should grow and expand its services.
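
A basic spatial-analytics building block behind examples like the logistics one is assigning each customer to its nearest service depot. The sketch uses great-circle (haversine) distance, which ignores roads; the depot names and customer locations are hypothetical, with coordinates roughly matching the city centers named.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


# Hypothetical depots (approximate city-center coordinates).
DEPOTS = {
    "Chicago depot": (41.88, -87.63),
    "Atlanta depot": (33.75, -84.39),
    "Dallas depot": (32.78, -96.80),
}


def nearest_depot(customer: tuple[float, float], depots: dict) -> str:
    """Name of the depot with the smallest great-circle distance to `customer`."""
    lat, lon = customer
    return min(depots, key=lambda name: haversine_km(lat, lon, *depots[name]))


if __name__ == "__main__":
    customers = {"Nashville": (36.16, -86.78), "Houston": (29.76, -95.37), "Milwaukee": (43.04, -87.91)}
    for city, loc in customers.items():
        print(f"{city}: {nearest_depot(loc, DEPOTS)}")
```

This brute-force search is fine for a handful of depots; at scale, a spatial index and road-network travel times would replace the straight-line comparison.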

The Value of Location Intelligence

Not all locations are equal. In business, we know this intuitively—not all outlets do the same volume, not all neighborhoods have the same growth prospects, not all communities face the same kinds of extreme weather risk or climate opportunity. Location is not some niche quality anymore; it’s a kind of master key that unlocks all the other elements of business intelligence in ways that are revealing, creative, and energizing.

Conclusion

Location intelligence is a powerful tool that can help businesses make informed decisions, reduce risk, and increase efficiency. By applying “where” to every question and analysis, companies can unlock growth, innovation, and competitive advantage. With the right tools and techniques, businesses can make the invisible visible, map the future, and thrive in a dynamic and uncertain environment.

FAQs

- What is location intelligence? Location intelligence refers to the process of using geographic data and spatial analysis to gain insights and make informed decisions.

- How can location intelligence be used in business? Location intelligence can be used in a variety of ways, including site selection, supply chain optimization, risk management, and competitive intelligence.

- What are the benefits of using location intelligence? The benefits of using location intelligence include increased efficiency, reduced risk, and improved decision-making.

- What tools and techniques are used in location intelligence? The tools and techniques used in location intelligence include geographic information systems (GIS), spatial analysis, and mapping and spatial analytics software.

- How can I get started with location intelligence? To get started with location intelligence, begin by identifying your business needs and goals, and then explore the tools and techniques available to help you achieve them.
